IMPORTANT NOTE: This blog post is no longer current. Please visit the latest version of this blog in the official Microsoft Graph Data Connect for SharePoint blog in the Tech Community web site.

0. Introduction

If you have a large SharePoint tenant (with hundreds of thousands of sites), you might run into some issues while trying to use Microsoft Graph Data Connect for SharePoint to run the Oversharing template provided as a template.

To help you on this project, I collected here a few tips to smooth the process and avoid a few of the common issues large enterprises might hit when running this for the first time.

1. Azure and Office Administration

To pull the data from your tenant, you will need to enable the Microsoft Graph Data Connect in the Office Admin Center, create several resources in the Azure Admin Portal and then authorize the Application to pull data back in the Office Admin Center.

In large organizations, it is likely that the Office Global Administrator role is enabled only for a few people in the organization. Also, making Office 365 configuration changes in production will require an internal approval process that could take days or even weeks.

Also, there are various Azure Administrator roles required to create an Application Registration, create a Resource Group, create a Storage Account, grant permissions to Storage Accounts, create a Microsoft Graph Data Connect Application and create a Synapse workplace. In a large enterprise, it is likely that these will be delegated to different people and internal approval processes might be required.

In general, it helps if you know exactly what configuration change you need. I wrote a step-by-step guide at https://barreto.home.blog/2023/08/22/step-by-step-gathering-a-detailed-dataset-on-your-sharepoint-sites-new-consent/

This only covers the scenario of the SharePoint Sites dataset, but the pre-requisites are the same for all the Microsoft Graph Data Connect datasets.

2. Regions

Large corporations are more likely to use multiple Azure and Microsoft 365 regions. Microsoft Graph Data Connect requires you to setup your Azure resources in a region that matches the Microsoft 365 region for your tenant. That includes both the Azure Storage Account and the Azure Synapse Workspace.

You can see details at https://learn.microsoft.com/en-us/graph/data-connect-datasets#regions

If you try to pull from an Azure region that does not match the Microsoft 365 region, you might get an empty dataset. The SharePoint team is adding improved error messages to clearly state the situation and suggest using another region.

Large corporations are also more likely to have a Microsoft 365 tenant that have SharePoint sites in multiple regions. For those, the tenant must configure Microsoft Graph Data Connect to pull each region separately, pulling the data into separate Azure Storage Accounts.

After the data is transferred to the tenant, they will have a set of files across multiple storage accounts, entirely under their control. They could potentially consolidate the data into a single storage account, a single SQL Server or other storage locations, in accordance with their compliance requirements.

3. Using the Oversharing Template

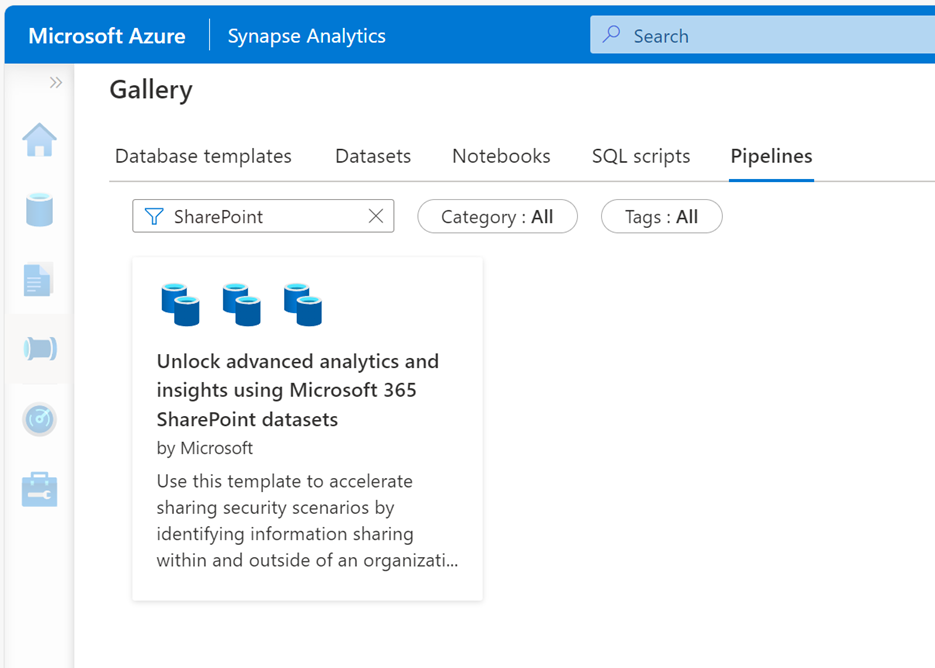

The Microsoft Graph Data Connect for SharePoint team provides a convenient Oversharing template that you can pull from the Azure Synapse Gallery. There are detailed instructions on how to get started with this template at https://go.microsoft.com/fwlink/?linkid=2207816

That includes how to import the template into a new pipeline in Azure Synapse from the Gallery.

The initial configuration for this might seem a little complex, so I would strongly recommend that you use the previous step-by-step for Sites before venturing into this one. This will also give you the two linked services needed for source (Office 365) and sink (Azure Storage), configured with the correct application credentials. You can re-use them when pulling the template from the Synapse gallery.

4. Running a Single Large Pipeline

The Oversharing Template is a single pipeline that brings in 6 distinct datasets and combines them into an Oversharing table that includes all the permissions and group details. This is very convenient, particularly if you want to schedule it to run regularly. The pipeline includes six “Copy data” tasks to bring down the datasets required, plus two “Notebook” tasks to process the group expansion.

When you run that pipeline for a large enterprise, it might take a few hours to complete. Also, if you’re running it for the first time, you could hit some issue (a missing permission, for instance) and that could require you to troubleshoot and run the pipeline again. This might lead you to pull down a large dataset more than once.

To avoid that, you could break your pipeline into separate steps, instead of having all of them connected. This will allow you to test each individual task before running the entire process as a single pipeline. Once you have every “Copy data” task running successfully, you can run the two “Notebook” tasks to process the AAD and SPO group expansions.

5. Dates and Paths

One of the interesting aspects of the Oversharing template is that it uses parameters to indicate the date that you are using for dataset extraction and the location in Azure Storage where you are landing the data.

If you are bringing each dataset down one at a time, you can use these paths to verify the resulting dataset before running the next task. Note that there is also a “latest” folder where the combined data will land when you run the aggregation notebooks.

6. Power BI Limits

The final step for Oversharing reporting is to pull the resulting datasets (which is basically a folder with a few JSON files in it) from Power BI so you can create your own analytics. Power BI is an amazing tool that will make it easy to pull lots of data and do all sorts of aggregation and summaries, both as reports and as charts.

However, if your tenant ends up outputting tens of millions of rows or more, it is possible that Power BI won’t be able to handle that large dataset, particularly if your PC does not have a lot of memory. From my experience, Power BI Desktop running on a fast PC with 32GB of RAM can typically handle a few million rows of data. If you have more than that, you will need to do some preparation work outside Power BI.

One option is to run a Synapse Notebook to do some data aggregation before you pull the data into Power BI. For instance, you could summarize the permission information per site and pull that summary into Power BI. This will require some additional work in Synapse, and you will have less flexibility on how to pivot the data in Power BI.

Another option is to load the data from Azure Storage into an SQL Server database. Then you can point Power BI to that database, which will scale much better than using JSON files. You will also be able to write SQL queries in Power BI as you pull the data, keeping this flexible.

In Azure Synapse, you can use a “Copy Data” task to move the data from JSON to SQL. You can also use a “Data Flow” task to transform the data. Moving to SQL will also require you to flatten (normalize) the Permissions dataset, which has multiple users/groups in a single permission object.

Note that you must first land the Microsoft Graph Data Connect dataset in an Azure Storage account before you can transform it and/or move it to SQL Server.

After you download all the datasets, expand group membership information for both Active Directory and SharePoint, flatten out the permissions dataset and upload everything to a SQL Server database, you will be able to report from Power BI connecting to that database.

7. Deltas

Once you get your first full pull from Microsoft Graph Data Connect for SharePoint, you will want to update it regularly. You can run the pipeline again, but this would cause you to redownload all the datasets in their entirety.

To reduce costs, you should investigate the use of “Delta” dataset pulls, which will reduce the number of objects downloaded, pulling just what change since your last pull. Some restrictions do apply. See details at https://barreto.home.blog/2023/01/27/sharepoint-on-mgdc-faq-how-can-i-use-delta-state-datasets/

8. Microsoft Entra ID Groups

The Oversharing template includes three Azure Active Directory (Microsoft Entra ID) datasets to get information about AAD Groups (one object per group), AAD Group Owners (one object per group owner) and AAD Group Members (one object per group member). It is important to note that these are not SharePoint datasets.

The main difference here is that these datasets are collected at the time of the request, unlike the SharePoint datasets, which are collected and pre-processed daily, during the tenant’s off-peak hours. There are two main consequences of that:

- These AAD Group datasets can take several hours to deliver for a large tenant.

- “Deltas” are not available for AAD datasets.

9. Conclusion

I hope this post helps with your deployment of the Oversharing template at your large enterprise. For more information, check my overview post at https://aka.ms/SharePointData.

Hey Jose, Thanks you so much for your time to share information like this. It’s very helpful when getting started on this journey. My question is about your experience working with large organizations and cost. Could you give a range of cost for an environment with 400.000 SPOODB sites, 340,000 users, and 376,000 groups? I also assuming based on this size a SQL server will be needed. Thank you for any insights you can provide.

LikeLike

Hi Jon!

In general, I would follow the table provided in this blog. From that table, take the numbers for 100K sites with Average collaboration and multiply by 4 to get the estimate for 400K sites. You can make some adjustments since you already know the number of groups (seems low for that size organization).

It seems like you will end up with 21M+ permissions, which will likely be too much to load directly into Power BI from a set of JSON files. Yes, at this scale, you probably want to load the data into a SQL Server as described in the article.

Jose

LikeLike

Thank you for your reply. I have read through that article and did the best I could to estimate. However, with the Information oversharing template setup they have additional datasets and a few more azure services, so that is going to up my cost. I was just curious if you have seen other organizations implement this and what it was costing them. Thank you

LikeLike

Hi!

Aside from the SharePoint datasets, the Oversharing templates uses some Microsoft Entra ID (Azure Active Directory) groups datasets. I don’t have a good way to estimate those, but you can find some guidance at https://barreto.home.blog/2024/01/26/counting-microsoft-entra-id-azure-active-directory-groups . I hope that helps.

Jose

LikeLike